HHS Is Making an AI Tool to Create Hypotheses About Vaccine Injury Claims

The U.S. Department of Health and Human Services (HHS) is quietly building a generative artificial intelligence tool designed to scan the nation’s vaccine safety database and automatically generate hypotheses about potential adverse effects from immunizations. The initiative, revealed in an AI use-case inventory released last week, is part of HHS’s broader push to integrate advanced machine learning into public health operations — but it’s already raising alarms among medical experts and ethicists.

According to the inventory, the AI system is still in development and has not yet been deployed. The previous year’s AI inventory report, however, shows that the project has been in the works since late 2023. The tool’s purpose is to comb through the Vaccine Adverse Event Reporting System (VAERS), a national database jointly managed by the Centers for Disease Control and Prevention (CDC) and the Food and Drug Administration (FDA), to detect patterns and generate hypotheses about possible vaccine-related side effects.

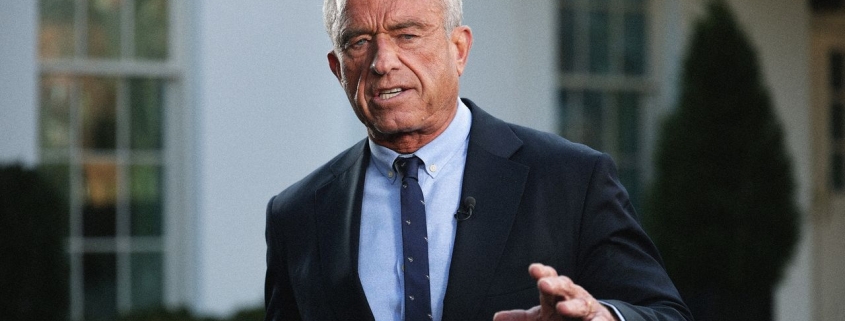

The timing is notable. HHS Secretary Robert F. Kennedy Jr., a longtime vaccine skeptic, has used his position to challenge established vaccine safety protocols. Since taking office, Kennedy has overhauled the childhood immunization schedule, removing recommended shots for several diseases including COVID-19, influenza, hepatitis A and B, meningococcal disease, rotavirus, and respiratory syncytial virus (RSV). He has also called for a complete restructuring of VAERS, claiming the system suppresses information about vaccine side effects.

Kennedy has further proposed changes to the federal Vaccine Injury Compensation Program that could make it easier for individuals to sue for adverse events that haven’t been scientifically linked to vaccines. Critics warn that the AI tool, once operational, could be used to lend an air of technological legitimacy to Kennedy’s anti-vaccine agenda.

“VAERS, at best, was always a hypothesis-generating mechanism,” says Paul Offit, a pediatrician and director of the Vaccine Education Center at Children’s Hospital of Philadelphia. “It’s a noisy system. Anybody can report, and there’s no control group.” Offit, who previously served on the CDC’s Advisory Committee on Immunization Practices, emphasizes that VAERS shows only that an event occurred after vaccination, not that the vaccine caused it. The CDC’s own website states that a VAERS report does not prove a vaccine caused an adverse event, yet anti-vaccine activists have long misused the data to argue vaccines are unsafe.

Leslie Lenert, former founding director of the CDC’s National Center for Public Health Informatics, notes that government scientists have been using traditional natural language processing AI models to analyze VAERS data for years. The shift toward large language models (LLMs) is a natural evolution, but it comes with significant risks.

One of VAERS’s major limitations is that it lacks denominator data — it doesn’t track how many people received a vaccine, which can make reported events appear more common than they are. Lenert stresses the importance of pairing VAERS data with other sources to accurately assess risk. Moreover, LLMs are notorious for producing convincing but false “hallucinations,” making human oversight essential when interpreting AI-generated hypotheses.
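To make the denominator problem concrete, here is a minimal Python sketch. All of the numbers are hypothetical and chosen purely for illustration, and the function name `reports_per_100k` is our own, not part of any HHS, CDC, or FDA tool. It assumes the dose counts come from a separate source, since VAERS itself does not record them.

```python
def reports_per_100k(report_count: int, doses_administered: int) -> float:
    """Reports per 100,000 doses administered.

    The dose count is the denominator that VAERS does not track, so it
    has to be supplied from another data source.
    """
    if doses_administered <= 0:
        raise ValueError("doses_administered must be positive")
    return report_count * 100_000 / doses_administered


# Hypothetical figures, not real VAERS or vaccination data.
vaccine_a = {"reports": 500, "doses": 10_000_000}
vaccine_b = {"reports": 50, "doses": 200_000}

rate_a = reports_per_100k(vaccine_a["reports"], vaccine_a["doses"])
rate_b = reports_per_100k(vaccine_b["reports"], vaccine_b["doses"])

# Vaccine A has ten times as many raw reports as vaccine B,
# but a lower reporting rate once doses are taken into account.
print(f"Vaccine A: {vaccine_a['reports']} reports, {rate_a:.1f} per 100k doses")
print(f"Vaccine B: {vaccine_b['reports']} reports, {rate_b:.1f} per 100k doses")
```

In this made-up scenario, the vaccine with far more raw reports turns out to have the lower reporting rate. Even a reporting rate is not an incidence rate and says nothing about causation, because anyone can file a report and there is no control group.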

“VAERS is supposed to be very exploratory,” says Lenert, now director of the Center for Biomedical Informatics and Health Artificial Intelligence at Rutgers University. “Some people in the FDA are now treating it as more than exploratory.”

The development of this AI tool comes at a time when trust in public health institutions is fragile, and misinformation about vaccines continues to spread rapidly online. While AI has the potential to enhance public health surveillance, its application in a politically charged environment like vaccine safety requires extreme caution. Without rigorous validation and transparent communication, the tool could easily become another weapon in the arsenal of those seeking to undermine vaccine confidence.

As HHS moves forward with its AI ambitions, the question remains: will this technology be used to strengthen public health, or will it be co-opted to amplify unfounded fears? The answer could have profound consequences for the future of immunization in the United States.
