Early tests suggest ChatGPT Health’s assessment of your fitness data may cause unnecessary panic

OpenAI’s ChatGPT Health Promised AI-Powered Medical Insights—But Early Tests Show It’s Still a Long Way from Reliable Diagnoses

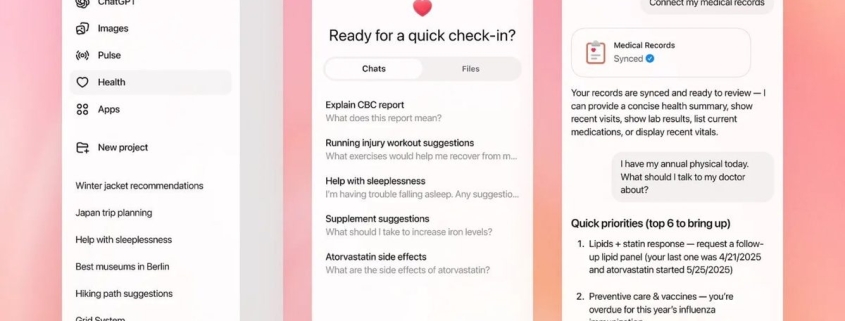

In a bold move earlier this month, OpenAI unveiled a dedicated health-focused space within ChatGPT, marketing it as a safer, smarter way for users to explore sensitive medical questions, track fitness trends, and analyze personal health data. Among its most eye-catching features was the ability to pull in long-term data from popular apps like Apple Health, MyFitnessPal, and Peloton, promising to deliver personalized insights and actionable health trends. But according to new testing by The Washington Post, the reality of ChatGPT Health’s capabilities is far less impressive—and potentially misleading.

When Washington Post technology columnist Geoffrey A. Fowler fed ChatGPT Health a decade’s worth of his Apple Health data, the chatbot delivered a shocking verdict: an F grade for his cardiac health. That’s the kind of result that could send anyone into a panic. But after Fowler shared the assessment with a cardiologist, the doctor called it “baseless,” noting that Fowler’s actual risk for heart disease was extremely low. The disconnect between the AI’s dire warning and the cardiologist’s reassurance raises serious questions about the reliability of these new AI health tools.

Dr. Eric Topol, founder and director of the Scripps Research Translational Institute, didn’t mince words. He told Fowler that ChatGPT Health is “not ready to offer medical advice” and that it leans too heavily on unreliable smartwatch metrics. The F grade, it turns out, was largely based on Apple Watch estimates of VO2 max and heart rate variability—two metrics that, while popular, are known to have significant limitations and can vary widely between devices and software versions. Independent research has repeatedly shown that Apple Watch VO2 max estimates often run low, yet ChatGPT Health treated them as definitive indicators of poor health.

The problems didn’t stop with the initial assessment. When Fowler asked ChatGPT Health to repeat the grading exercise, the results were wildly inconsistent. In different conversations, the chatbot assigned grades ranging from an F to a B, sometimes ignoring recent blood test reports it had access to, and occasionally forgetting basic details like Fowler’s age and gender. Anthropic’s Claude for Healthcare, which also launched this month, showed similar inconsistencies, with grades fluctuating between a C and a B minus for the same data.

Both OpenAI and Anthropic have been careful to emphasize that their tools are not meant to replace doctors and are only intended to provide general context. Yet both chatbots delivered confident, highly personalized evaluations of cardiovascular health, complete with letter grades and specific risk assessments. This combination of authority and inconsistency is a recipe for confusion and, potentially, harm. Healthy users could be needlessly scared by dire warnings, while those with genuine health concerns might be falsely reassured by overly optimistic assessments.

The underlying promise of AI in healthcare is tantalizing: the ability to sift through years of personal health data, spot patterns invisible to the human eye, and offer tailored advice that could help people live longer, healthier lives. But early testing suggests that, at least for now, feeding years of fitness tracking data into these tools creates more confusion than clarity. The technology is still prone to errors, inconsistencies, and overreliance on imperfect data sources.

As AI continues to make inroads into healthcare, it’s clear that the industry—and the public—will need to proceed with caution. While the dream of AI-powered medical assistants is compelling, the reality is that these tools are still in their infancy. For now, when it comes to your health, nothing beats a conversation with a real doctor.

Tags:

OpenAI, ChatGPT Health, AI medical assistant, health data analysis, Apple Health, VO2 max, heart rate variability, medical AI, personalized health insights, fitness tracking, digital health, AI diagnostics, health tech, wearable technology, medical advice, cardiologist, Scripps Research Institute, Anthropic Claude, health misinformation, AI limitations, smartwatch health metrics, health trends, fitness apps, Peloton, MyFitnessPal

