Intel Posts 2026 Update For Cache Aware Scheduling On Linux

Intel’s Cache Aware Scheduling Makes a Comeback: Fresh Updates Promise Major Performance Gains for Multi-Cache CPUs

By TechWire Daily

February 14, 2025

A Linux kernel enhancement aimed at squeezing more performance from modern multi-cache processors has returned to the spotlight. Intel’s Cache Aware Scheduling (CAS), designed to colocate tasks that share data within the same cache domain, has just received its first major update of the year. The latest patches, posted for community review, build on the v2 updates released in December and signal a renewed push toward mainline kernel integration, potentially as soon as 2026.

What Is Cache Aware Scheduling?

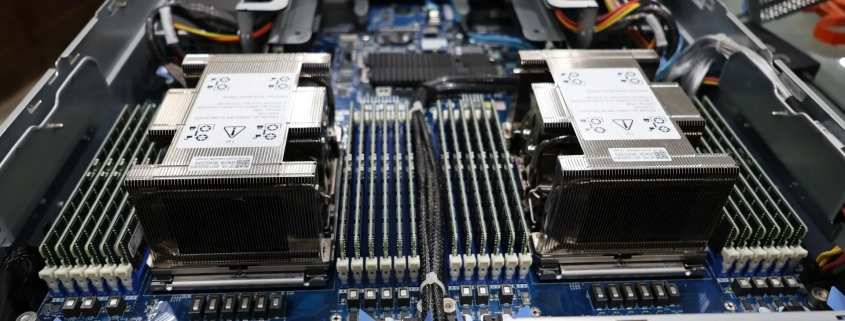

Modern CPUs, especially those in servers and high-performance workstations, often feature multiple cache domains—each with its own Last Level Cache (LLC). When tasks running on different cores access the same data, inefficient scheduling can cause that data to bounce between caches, degrading performance through increased latency and cache misses. CAS aims to solve this by grouping tasks that share data onto cores within the same cache domain, improving cache locality and reducing costly cross-domain transfers.
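
To make "cache domain" concrete, the following is a minimal Python sketch that lists which CPUs share a last-level cache on a Linux machine. It reads the standard sysfs cache topology files (`level` and `shared_cpu_list` under each CPU's `cache/indexN` directory). The function names `parse_cpu_list` and `llc_domains` are illustrative helpers, not part of the CAS patches, and the sketch assumes the highest-numbered cache level reported for a CPU is its LLC.

```python
from pathlib import Path


def parse_cpu_list(text):
    """Expand a kernel CPU list such as "0-3,8-11" into a sorted list of ints."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            start, end = part.split("-")
            cpus.update(range(int(start), int(end) + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


def llc_domains(sysfs_root="/sys/devices/system/cpu"):
    """Return the distinct CPU groups that share a highest-level cache."""
    domains = set()
    for cpu_dir in Path(sysfs_root).glob("cpu[0-9]*"):
        best_level, best_shared = -1, None
        # Assumption: the cache index with the highest "level" is the LLC.
        for index_dir in (cpu_dir / "cache").glob("index[0-9]*"):
            try:
                level = int((index_dir / "level").read_text())
                shared = (index_dir / "shared_cpu_list").read_text()
            except (OSError, ValueError):
                continue  # skip entries that are missing or unreadable
            if level > best_level:
                best_level, best_shared = level, shared
        if best_shared is not None:
            domains.add(tuple(parse_cpu_list(best_shared)))
    return sorted(domains)


if __name__ == "__main__":
    for i, cpus in enumerate(llc_domains()):
        print(f"LLC domain {i}: CPUs {cpus}")
```

A system with a single shared LLC prints one group, in which case there is nothing for cache-aware placement to choose between. Multi-socket or chiplet-based systems typically print several groups.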

Intel originally developed CAS with its Xeon processors in mind, but recent Phoronix testing revealed that AMD EPYC CPUs also see significant gains under the same scheduling logic—an encouraging sign for cross-platform adoption.

What’s New in v3?

The latest v3 patchset, now rebased against Linux 6.19, introduces several refinements:

• Smarter fallback behavior: After repeated load-balancing failures, the scheduler will now skip CAS-specific behavior to avoid degrading performance in edge cases.

• Reduced sorting overhead: Previously costly sorting operations have been optimized to minimize scheduler latency.

• Per-CPU LLC accounting: The number of tasks preferring each LLC is now tracked per CPU in the lowest-level scheduling domain, improving accuracy without global overhead.

• General cleanup: Various code refinements and bug fixes improve stability and maintainability.

These changes address feedback from earlier reviews and improve the patchset’s readiness for upstream consideration.
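
As a rough illustration of the fallback and accounting ideas in the list above, here is a toy Python model. It is not the kernel implementation. The class name, the `max_failures` threshold, and the decision rule are all assumptions made for the sketch. It shows only the general shape: keep a count of tasks preferring each LLC, refuse migrations that would pull a task out of its preferred LLC, and stop refusing once balancing has failed several times in a row.

```python
from collections import Counter


class ToyCacheAwareBalancer:
    """Toy model only; names and the threshold are illustrative, not kernel code."""

    def __init__(self, max_failures=3):
        self.max_failures = max_failures  # hypothetical fallback threshold
        self.failures = 0                 # consecutive blocked balance attempts
        self.preferring = Counter()       # LLC id -> number of tasks preferring it

    def add_task(self, preferred_llc):
        """Record that one more task prefers the given LLC."""
        self.preferring[preferred_llc] += 1

    def remove_task(self, preferred_llc):
        """Record that a task preferring the given LLC has gone away."""
        self.preferring[preferred_llc] -= 1

    def may_migrate(self, preferred_llc, src_llc, dst_llc):
        """Decide whether a balance pass may move a task from src_llc to dst_llc."""
        cache_aware = self.failures < self.max_failures
        leaves_preferred = preferred_llc == src_llc and dst_llc != src_llc
        if cache_aware and leaves_preferred:
            # Cache-aware mode: keep the task near its data, note the failure.
            self.failures += 1
            return False
        # Either the move is harmless or we have fallen back to plain balancing.
        self.failures = 0
        return True


if __name__ == "__main__":
    balancer = ToyCacheAwareBalancer(max_failures=3)
    balancer.add_task(preferred_llc=0)
    decisions = [balancer.may_migrate(0, src_llc=0, dst_llc=1) for _ in range(4)]
    print(decisions)  # [False, False, False, True]
```

In this model the first three attempts are blocked to preserve locality, and the fourth is allowed because the failure count has reached the threshold. That is the kind of edge case the fallback is meant to keep from hurting overall load balance.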

Benchmarks Show Real-World Impact

Phoronix’s independent testing has already demonstrated CAS’s potential:

• On Intel Xeon 6 Granite Rapids servers, CAS delivered measurable throughput and latency improvements in synthetic and real-world workloads.

• On AMD EPYC Turin processors, gains were even more pronounced in certain multi-threaded scenarios, with reductions in cache miss rates and better core utilization.

These results suggest CAS could become a key optimization for data centers, cloud providers, and anyone running latency-sensitive, cache-heavy applications.

What’s Next?

The v3 patches are now available on the Linux kernel mailing list for review. If the community response is positive, CAS could be queued for an upcoming merge window, with the goal of landing in the mainline kernel by 2026.

For Linux users eager to test the feature today, the patches can be applied to a Linux 6.19 source tree. As with any out-of-tree patchset, this means compiling the kernel manually, and some instability should be expected.

Why This Matters

As CPUs continue to scale in core count and cache complexity, intelligent scheduling will be critical to unlocking their full potential. Intel’s Cache Aware Scheduling represents a pragmatic, hardware-aware approach to an age-old problem—one that could deliver tangible benefits across the entire Linux ecosystem.

